Elf: Enabling Approximate Video Query on Autonomous Cameras

Surveillance cameras are pervasive: many organizations run more than 200 cameras 24×7; 98 million network surveillance cameras were shipped globally in 2017; and the UK alone has over 4 million CCTV cameras.

Autonomous Camera: extending the geo-frontier of video analytics

Today’s IoT cameras and their analytics mostly target urban and residential areas with ample resources, notably electricity supply and network bandwidth. Yet video analytics has rich opportunities in more diverse environments where cameras are “off grid” and connected via highly constrained networks. These environments include construction sites, interstate highways, underdeveloped regions, and farms.

There, cameras must be autonomous. First, they must be energy-independent: lacking a wired power supply, they typically run on harvested energy, e.g., solar or wind. Second, they must be compute-independent: on low-power wide-area networks (LPWANs), where bandwidth is low (e.g., tens of Kbps) or even intermittent, the cameras must execute video analytics on device and emit only concise summaries to the cloud.

Elf: a runtime for object counting on autonomous cameras

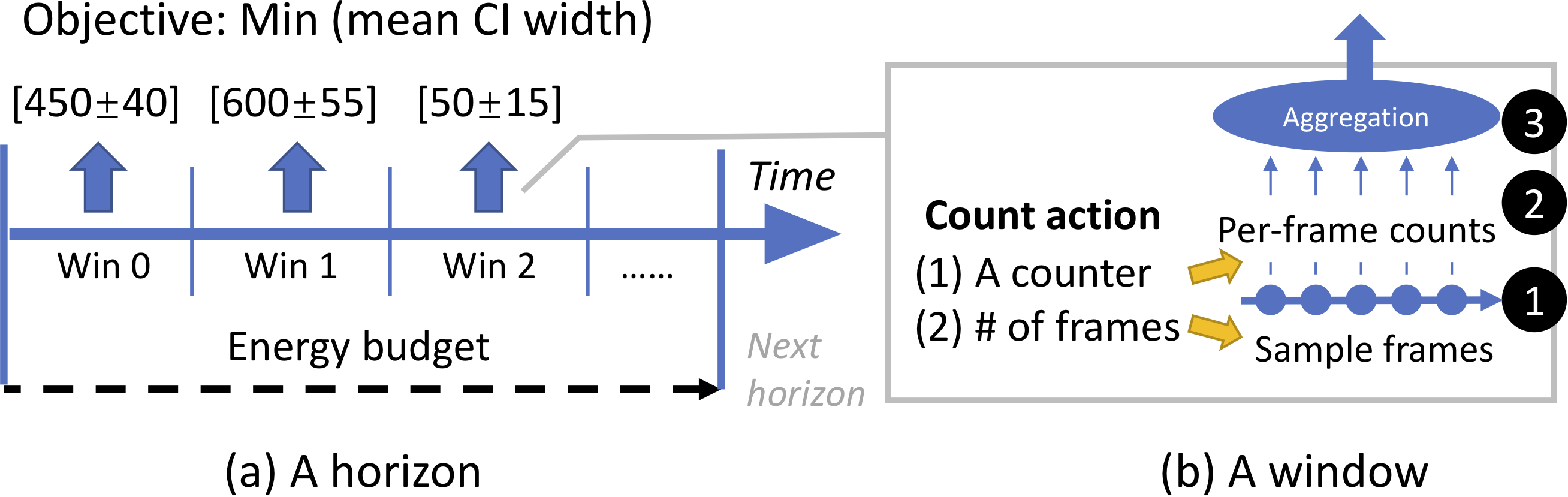

As an initial effort to support analytics on autonomous cameras, we build Elf for a key query type: object counting with bounded errors. Inexpensive IoT cameras produce large volumes of video. To extract insights from video, a common approach is to summarize a scene with object counts. As shown in the figure above, a summary consists of a stream of object counts, one count for each video timespan called an aggregation window. Object counting is already known to be vital in urban scenarios: use cases include counting customers in retail stores to better arrange merchandise, and counting audiences at sports events to avoid crowd-related disasters. Beyond urban scenarios, object counting further enables rich analytics: along interstate highways, cameras estimate traffic from vehicle counts, which is cheaper than deploying inductive loop detectors; on a large cattle farm, scattered cameras count cattle to monitor their distribution, which is more cost-effective than livestock wearables; in the wilderness, cameras count animals to track their behavior.
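To make the notion of a summary concrete, here is a minimal Python sketch of turning per-frame counts into one count per aggregation window. It assumes, for illustration only, that a window's summarized count is the mean of the per-frame counts inside it; `WindowCount` and `summarize` are hypothetical names, not part of Elf.

```python
from collections import defaultdict
from dataclasses import dataclass
from typing import Dict, Iterable, List, Tuple


@dataclass(frozen=True)
class WindowCount:
    start: float  # window start time (seconds)
    end: float    # window end time (seconds)
    count: float  # summarized object count for the window


def summarize(frames: Iterable[Tuple[float, int]], window_s: float) -> List[WindowCount]:
    """Group (timestamp, per-frame object count) pairs into fixed-length
    aggregation windows and emit one count per window (here: the mean).
    Windows that contain no frames are omitted."""
    buckets: Dict[int, List[int]] = defaultdict(list)
    for ts, n in frames:
        buckets[int(ts // window_s)].append(n)  # index of the window this frame falls in
    return [
        WindowCount(k * window_s, (k + 1) * window_s, sum(v) / len(v))
        for k, v in sorted(buckets.items())
    ]


# Example: five frames spread over two 60-second windows.
summary = summarize([(0, 2), (10, 4), (30, 3), (45, 5), (70, 1)], window_s=60)
for w in summary:
    print(w)
```

The output stream, not the video, is what a bandwidth-starved camera would upload.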

Elf’s novelty centers on planning the camera’s count actions under energy constraints. (1) Elf explores the rich action space spanned by the number of sampled image frames and the choice of per-frame object counter; it unifies the errors from both sources into a single bounded error. (2) To decide count actions at run time, Elf employs a learning-based planner that jointly optimizes for past and future videos without delaying result materialization.
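The idea of folding two error sources into one bound can be illustrated with a simple textbook construction; this is not Elf's actual estimator. The sketch assumes frames are sampled uniformly at random without replacement, uses a normal (CLT) confidence interval with a finite-population correction for the sampling error, and adds `counter_err`, a hypothetical, assumed bound on the counter's average per-frame error over the window (e.g., profiled offline). By the triangle inequality, the two terms add up to an approximate bound on the distance between the estimate and the true mean count.

```python
import math
import statistics
from typing import Sequence, Tuple


def estimate_with_bound(
    sampled_counts: Sequence[int],
    total_frames: int,
    counter_err: float,
    z: float = 1.96,
) -> Tuple[float, float]:
    """Estimate a window's mean per-frame count from sampled frames.

    sampled_counts: NN-counter outputs on n frames drawn uniformly at random,
                    without replacement, from the window's total_frames frames.
    counter_err:    hypothetical, assumed bound on the counter's average
                    per-frame error over the window (e.g., profiled offline).
    z:              normal quantile; 1.96 gives roughly 95% confidence.

    Returns (estimate, half_width): sampling half-width plus counter_err.
    """
    n = len(sampled_counts)
    if not 2 <= n <= total_frames:
        raise ValueError("need 2 <= n <= total_frames")
    mean = statistics.fmean(sampled_counts)
    sd = statistics.stdev(sampled_counts)
    # Finite-population correction: sampling error vanishes when every frame is counted.
    fpc = math.sqrt((total_frames - n) / (total_frames - 1))
    sampling_hw = z * sd / math.sqrt(n) * fpc
    # Triangle inequality: |estimate - truth| <= sampling error + counter error.
    return mean, sampling_hw + counter_err


est, hw = estimate_with_bound([3, 5, 4, 4], total_frames=100, counter_err=0.5)
print(f"count ~ {est:.2f} +/- {hw:.2f}")
```

Sampling more frames shrinks the first term but costs energy; a cheaper but less accurate counter saves energy but enlarges the second term. This tension is what makes the action space worth planning over.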

Elf is the first software system to execute video object counting under energy constraints. Our experiences make a case for advancing the geographic frontier of video analytics.

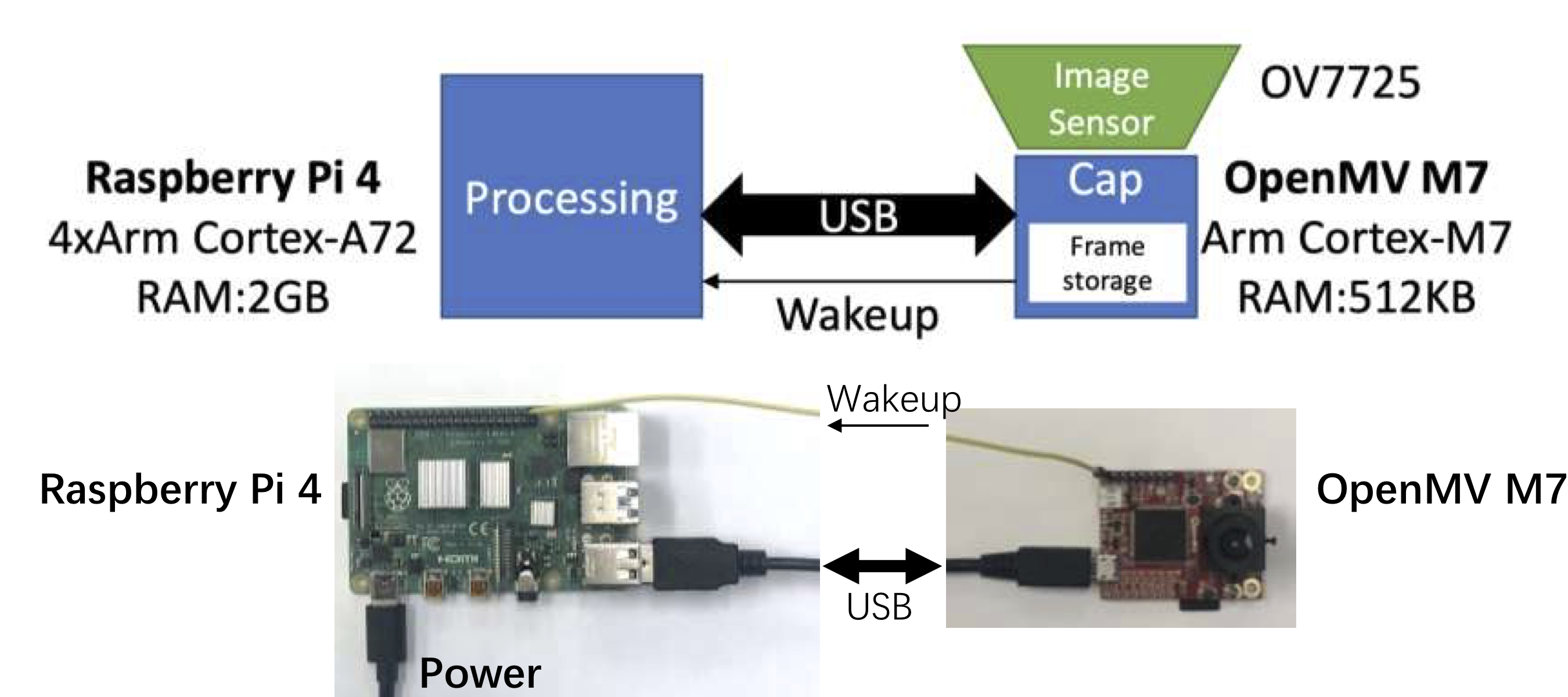

Commodity IoT cameras are often energy-inefficient at sampling sparse image frames: to capture one frame, the whole camera wakes up from deep sleep and goes back to sleep afterward, a cycle that takes several seconds. We measured that the energy for capturing a frame is almost the same as the energy for processing it (YOLOv2 on a Raspberry Pi 4). While the camera may defer processing images (e.g., until the window ends) to amortize the wake-up energy cost, it cannot defer periodic frame capture.

To make periodic image capture efficient, we build a hardware prototype with a pair of heterogeneous processors, as shown above. The prototype includes one capture unit, a microcontroller running an RTOS that captures frames periodically with rapid wakeup/suspend; and one processing unit, an application processor running Linux that wakes up only to execute NN counters.
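Why a cheap capture unit matters can be seen with a back-of-the-envelope energy model, sketched below in Python. All parameter values are hypothetical placeholders, not measurements from Elf. The model charges each window n times (capture + processing) plus one wake-up of the processing unit for a batch at window end. On a commodity camera every capture pays for a full-camera wake-up, so `capture_j` is set equal to `process_j`, in line with the measurement described above; on the heterogeneous prototype, the microcontroller makes `capture_j` much smaller.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class EnergyModel:
    capture_j: float     # energy to capture one frame, incl. any wake-up it forces
    process_j: float     # energy to run the NN counter on one frame
    batch_wake_j: float  # one-off cost to wake the processing unit at window end

    def window_energy(self, n_frames: int) -> float:
        """Energy for one aggregation window: n periodic captures, then one
        batched processing pass over all captured frames."""
        if n_frames <= 0:
            return 0.0
        return n_frames * (self.capture_j + self.process_j) + self.batch_wake_j

    def max_frames(self, budget_j: float) -> int:
        """Largest number of frames per window that fits in an energy budget."""
        if budget_j < self.batch_wake_j:
            return 0
        per_frame = self.capture_j + self.process_j
        return math.floor((budget_j - self.batch_wake_j) / per_frame)


# Illustrative placeholder values only (joules), not measurements.
commodity = EnergyModel(capture_j=5.0, process_j=5.0, batch_wake_j=2.0)
prototype = EnergyModel(capture_j=0.5, process_j=5.0, batch_wake_j=2.0)
print(commodity.window_energy(10), prototype.window_energy(10))
print(commodity.max_frames(50.0), prototype.max_frames(50.0))
```

In this toy setting, the same 50 J budget affords 4 frames per window on the commodity camera versus 8 on the prototype; more sampled frames generally means a smaller sampling error.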

Tested on more than 1,000 hours of video under realistic energy constraints, Elf continuously generates object counts within only 11% of the true counts on average. Alongside the counts, Elf reports narrow, bounded errors that are up to 3.4× smaller than those of competitive baselines.
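An accuracy figure such as "within 11% of the true counts on average" is an average relative error across windows. A minimal sketch of such a metric follows; it assumes per-window relative error |estimate - truth| / truth, averaged over windows with a nonzero true count, which may differ from the exact definition used in Elf's evaluation.

```python
from typing import Sequence


def mean_relative_error(estimates: Sequence[float], truths: Sequence[float]) -> float:
    """Average of |estimate - truth| / truth over windows with a nonzero true count."""
    if len(estimates) != len(truths):
        raise ValueError("estimates and truths must have the same length")
    # Windows whose true count is zero are skipped: relative error is undefined there.
    errs = [abs(e - t) / t for e, t in zip(estimates, truths) if t > 0]
    if not errs:
        raise ValueError("no window with a nonzero true count")
    return sum(errs) / len(errs)


print(mean_relative_error([9, 22, 5], [10, 20, 5]))
```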